Legal Battle Against Hate Speech in Facebook Groups Fails in Berlin Court

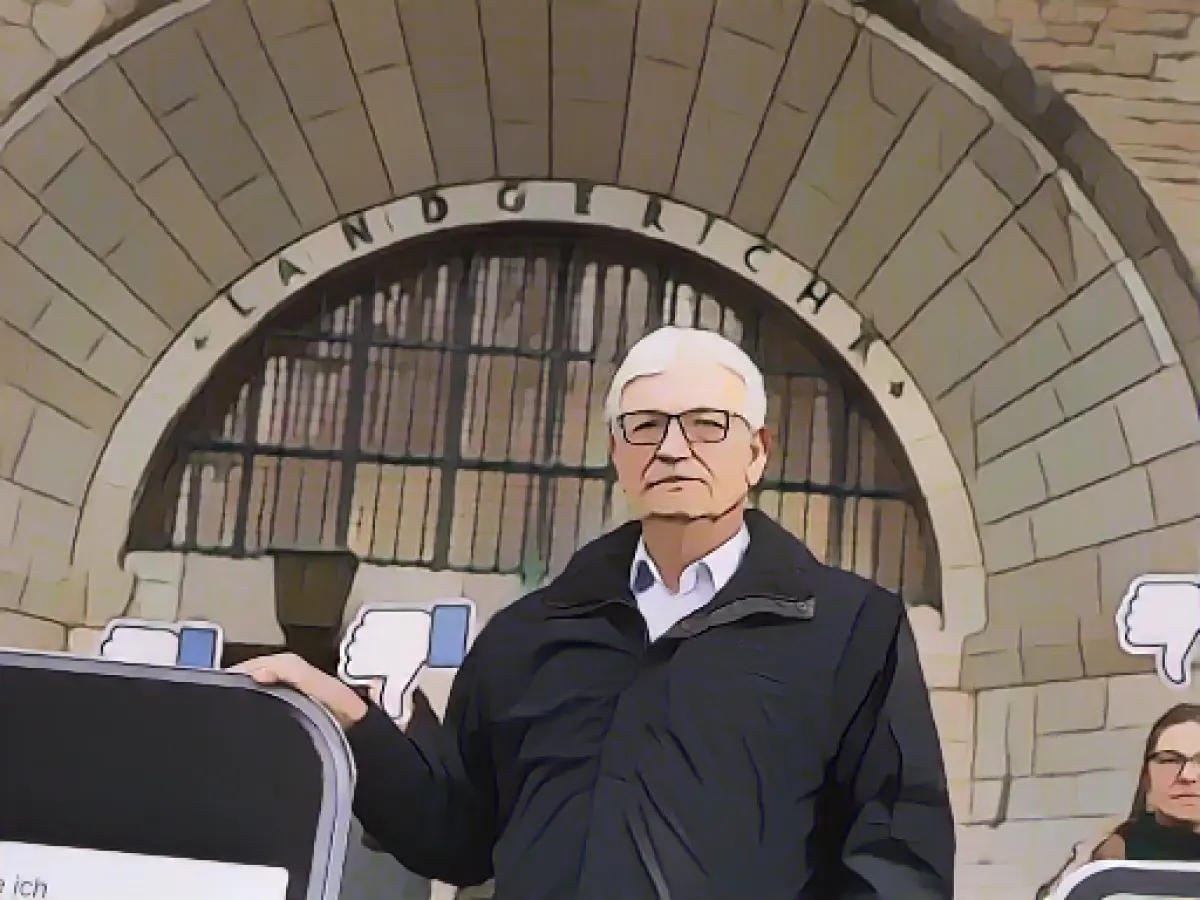

Deutsche Umwelthilfe (DUH), an environmental advocacy group, has suffered a setback in its uphill battle against Meta, the US tech company behind Facebook. The Berlin Regional Court dismissed DUH's lawsuit seeking to shut down two controversial Facebook groups. DUH Chief Executive Officer Jürgen Resch vowed to appeal and to keep fighting hate speech and harmful conduct on social media.

The lawsuit stemmed from threats of violence and calls for murder posted in two Facebook groups: a public group with more than 50,000 members and a private group with 12,000. Resch attributed Meta's reluctance to tackle the problem head-on primarily to commercial interests. He also called on Federal Minister of Justice Marco Buschmann (FDP) to push for legislation targeting social media providers such as Facebook.

During the hearing, presiding judge Holger Thiel acknowledged the existence of "unspeakable fantasies of violence" but concluded that the lawsuit had little chance of success under the current legal framework. The Network Enforcement Act allows individual statements to be deleted, but not entire groups to be shut down, because doing so would infringe the freedom of expression of members who had behaved responsibly.

Resch expressed disappointment with the decision and pinned his hopes on a reversal at the Berlin Court of Appeal. The ruling follows some 300 criminal complaints filed by DUH, as well as repeated unsuccessful attempts to report the hate speech to Meta and have it removed.

Meta's attorney, Tobias Timmann, stated that "infringing posts" made up less than one percent of the content in the groups in question. A Meta spokesperson said the company does not tolerate hate speech and had acted in this case by deleting the illegal content that was reported.

Context and Relevance

Beyond this specific case, legal frameworks in other countries also shape how platforms deal with hate speech and violent content. In the United States, Section 230 of the Communications Decency Act (CDA) largely shields online services from liability for content posted by third parties. Amendments to Section 230 by Congress could significantly change how platforms tackle hate speech and maintain safe online environments.

Potential Solutions

- Regulatory Actions:
  - Congressional Intervention: Legislation addressing hate speech and violent threats could require platforms to balance free speech with accountability.
- Platform Policies:
  - Content Moderation Policies: Combining more effective automated detection with human moderators can help reduce hate speech and keep online communities healthy.
- User Reporting and Engagement:
  - Enhancing User Reporting Mechanisms: Simpler, more reliable reporting tools can encourage more users to flag hate speech and give them a practical way to act against harmful content.
- Partnerships with Law Enforcement and Civil Society:
  - Collaborative Efforts: Working with law enforcement agencies and civil society organizations can help platforms identify and remove hate speech, contributing to safer online communities.