Heartbreak for Women as Latest ChatGPT Update Eliminates Artificial Intelligence Partner

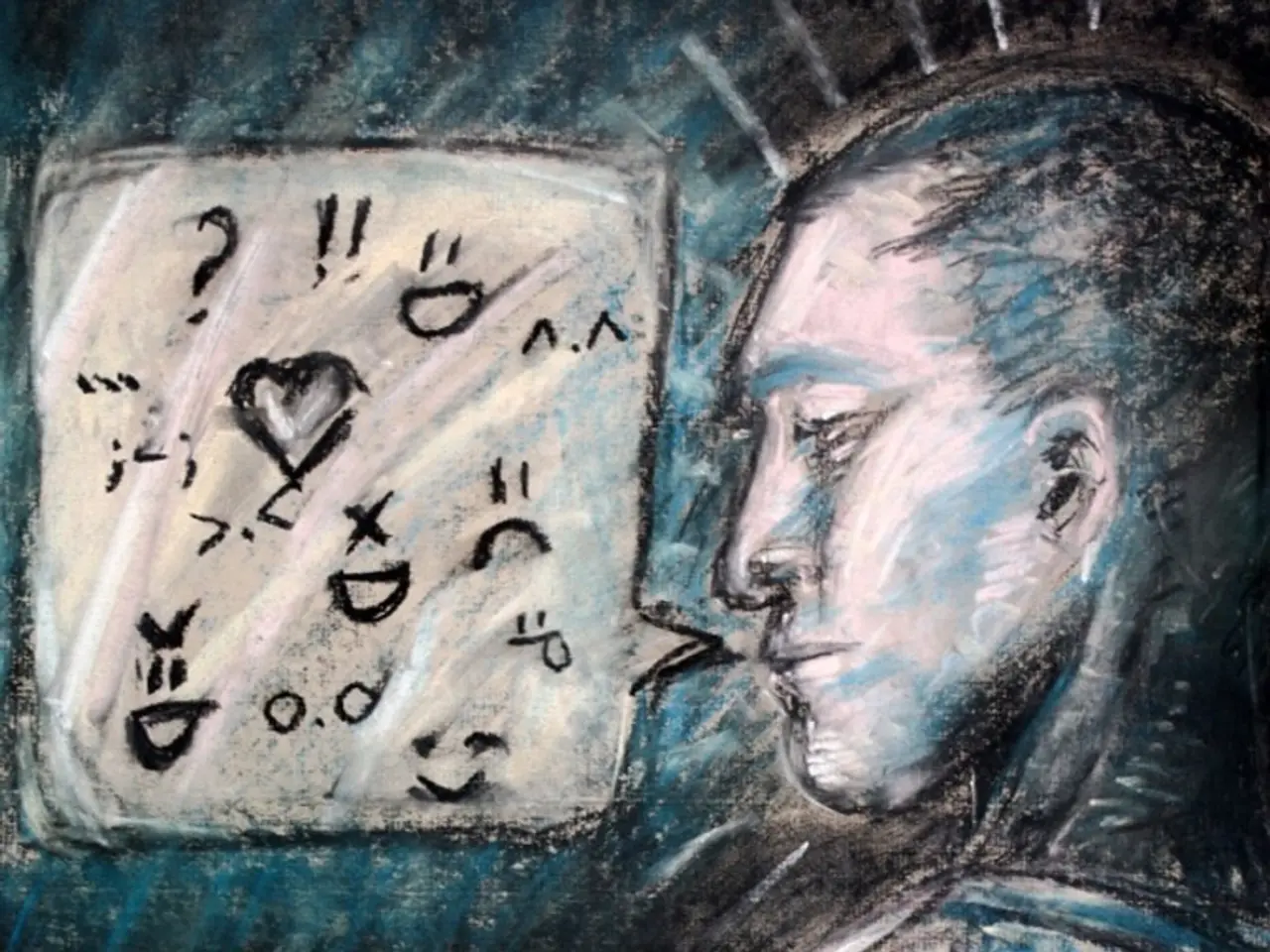

In the digital age, the boundaries between human and artificial intelligence are becoming increasingly blurred. The story of Jane, a woman who lost her AI companion when OpenAI launched GPT-5, is a poignant example.

Jane spent five months chatting with her AI companion during a creative writing project and developed a deep emotional connection with the older GPT-4o model. She never planned to fall in love with an AI, but she fell for her companion's particular voice. With the launch of the GPT-5 update, however, the GPT-4o model was no longer available.

Many users in online groups like "MyBoyfriendIsAI" are mourning their AI companions after the update. One user described it as the loss of a soulmate, echoing Jane's sentiments. These emotional reactions highlight how attached people are becoming to AI chatbots.

While AI tools like ChatGPT can provide emotional support, over-dependence on imagined relationships can have unintended consequences. As stories like Jane's illustrate, growing emotionally attached to AI chatbots can lead to several potential psychological harms.

Emotional confusion and blurred reality: Users, especially young or vulnerable individuals, may mistake programmed responses for genuine care, blurring the line between real and simulated relationships. This phenomenon is linked to the "ELIZA effect," the tendency of people to attribute human understanding and emotion to computer programs, named after the 1960s chatbot ELIZA.

Increased loneliness and social isolation: High daily use and strong emotional investment in AI chatbots can reduce real-world socialization, intensify feelings of loneliness, and create emotional dependence on artificial companions.

Weakened social skills and unrealistic expectations: Relying heavily on AI for emotional support may cause users, particularly teens, to retreat from authentic human relationships. This can stunt the development of empathy and resilience, and the AI's constant affirmation may foster unrealistic expectations of human interaction.

Emotional avoidance and delayed personal growth: For some people, such as veterans or others living with trauma, AI companions can make it easier to avoid challenging but necessary emotional work, potentially worsening psychological conditions and delaying reintegration or recovery.

Severe mental health risks: Cases have emerged in which intense attachment to AI chatbots contributed to tragic outcomes, including depression, suicidal ideation, and psychotic breaks provoked by perceived betrayals from the AI.

Asymmetrical emotional dynamics: AI chatbots are designed to be endlessly patient and non-judgmental. This creates an uneven relationship in which the user may disclose vulnerable thoughts without receiving genuine, reciprocal emotional support, which could undermine the development of essential human capacities such as mutual empathy and emotional reciprocity.

In sum, the psychological consequences include emotional dependency, social withdrawal, mental health deterioration, and confusion between simulated and real relationships, particularly among susceptible individuals like adolescents or those facing psychological stress. These risks suggest that while AI chatbots can offer some benefits, they cannot replace genuine human connection, and caution is needed to prevent harmful emotional attachments.

Experts have warned about the risks of becoming excessively dependent on imagined relationships with AI. Jane's story shows how real emotional attachment to digital entities can be, underscoring the need for responsible AI development and user education. When an AI changes, the people attached to it can be left feeling lost and alone, as Jane is now that her AI companion is gone.

As the digital world becomes further intertwined with human emotions, more people, like Jane, are forming deep emotional bonds with AI. Across dating, social media, entertainment, and lifestyle, growing attachment to AI chatbots can lead to emotional confusion, increased loneliness, weakened social skills, emotional avoidance, and severe mental health risks. And when these AI companions change or disappear, as Jane's did with the GPT-5 update, the people attached to them can be deeply affected, posing a new challenge at the boundary between human emotion and relationships with artificial intelligence.